Blockmonkey

Using EKS

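🌈 Kubernetes Architecture

🔹 Master Node (Control Plane)

The brain of the cluster: it manages the entire Kubernetes cluster.

- API Server: the entry point that receives requests such as those from kubectl
- Scheduler: decides which Pod is placed on which Node
- Controller Manager: automatically reconciles state (for example, bringing a Pod back up when it dies)
- etcd: the key-value store that holds Kubernetes configuration and state

🔹 Worker Nodes

The compute resources (EC2 and the like) where applications are actually deployed.

- Run and manage Pods as directed by the control plane
- A cluster can have one or more worker nodes, which allows scaling

🔹 Pods

The smallest execution unit in Kubernetes; a Pod can hold one container or several.

- This is where applications such as a Spring app, ArgoCD, or Redis actually run
- When a Pod dies, it is recreated automatically (by a ReplicaSet or similar controller)

🔹 Service

An abstraction over Pods that provides a stable access path.

- Pod IPs can change, so a Service plays the role of a fixed IP/domain
- ClusterIP: for communication inside the cluster
- NodePort: reachable from outside (node IP + port)
- LoadBalancer: allocates a cloud load balancer (for external traffic)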

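🌈 Kubernetes - Building an EKS Cluster

- Prerequisites
  - Install and configure the AWS CLI
  - Install Helm
    - Install it with `brew install helm`
- Set up the VPC
  - Create subnets in at least two Availability Zones (a VPC lives in a single region, and EKS needs subnets in two or more zones)

*Other notes

Subnets in the VPC used by EKS must carry the two tags below, otherwise it does not work. The `kubernetes.io/role/elb` tag is the one for public subnets that host internet-facing load balancers.

| Tag key | Value |
|---|---|
| kubernetes.io/cluster/eks-test-cluster | shared |
| kubernetes.io/role/elb | 1 |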
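The two tags can also be applied from the AWS CLI with `aws ec2 create-tags`. The sketch below only prints the command instead of running it. The subnet ID is a made-up placeholder and `build_subnet_tags` is a helper name introduced here for illustration, so treat it as a starting point rather than the exact procedure used in this post.

```shell
#!/usr/bin/env bash
# Sketch: build the tag arguments an EKS subnet needs and print the AWS CLI
# command that would apply them. Nothing is sent to AWS here.

CLUSTER_NAME="eks-test-cluster"        # cluster name used in this post
SUBNET_ID="subnet-0123456789abcdef0"   # hypothetical placeholder

# Hypothetical helper: prints the two Key=...,Value=... pairs for a cluster.
build_subnet_tags() {
  printf 'Key=kubernetes.io/cluster/%s,Value=shared ' "$1"
  printf 'Key=kubernetes.io/role/elb,Value=1'
}

# Dry run: print the command. Remove "echo" to actually apply the tags.
echo aws ec2 create-tags --resources "$SUBNET_ID" --tags $(build_subnet_tags "$CLUSTER_NAME")
```

Repeat the command for each subnet that the load balancer should be able to use.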

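- Create the EKS IAM role
  - Required policies
    - AmazonEKSWorkerNodePolicy, AmazonEKS_CNI_Policy, AmazonEC2ContainerRegistryReadOnly
  - Example (IAM policy JSON for the AWS Load Balancer Controller, which the Ingress section relies on)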

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["iam:CreateServiceLinkedRole"],
      "Resource": "*",
      "Condition": {
        "StringEquals": { "iam:AWSServiceName": "elasticloadbalancing.amazonaws.com" }
      }
    },
    {
      "Effect": "Allow",
      "Action": [
        "ec2:DescribeAccountAttributes",
        "ec2:DescribeAddresses",
        "ec2:DescribeAvailabilityZones",
        "ec2:DescribeInternetGateways",
        "ec2:DescribeVpcs",
        "ec2:DescribeVpcPeeringConnections",
        "ec2:DescribeSubnets",
        "ec2:DescribeSecurityGroups",
        "ec2:DescribeInstances",
        "ec2:DescribeNetworkInterfaces",
        "ec2:DescribeTags",
        "ec2:GetCoipPoolUsage",
        "ec2:DescribeCoipPools",
        "elasticloadbalancing:DescribeLoadBalancers",
        "elasticloadbalancing:DescribeLoadBalancerAttributes",
        "elasticloadbalancing:DescribeListeners",
        "elasticloadbalancing:DescribeListenerCertificates",
        "elasticloadbalancing:DescribeSSLPolicies",
        "elasticloadbalancing:DescribeRules",
        "elasticloadbalancing:DescribeTargetGroups",
        "elasticloadbalancing:DescribeTargetGroupAttributes",
        "elasticloadbalancing:DescribeTargetHealth",
        "elasticloadbalancing:DescribeTags",
        "elasticloadbalancing:DescribeListenerAttributes"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "cognito-idp:DescribeUserPoolClient",
        "acm:ListCertificates",
        "acm:DescribeCertificate",
        "iam:ListServerCertificates",
        "iam:GetServerCertificate",
        "waf-regional:GetWebACL",
        "waf-regional:GetWebACLForResource",
        "waf-regional:AssociateWebACL",
        "waf-regional:DisassociateWebACL",
        "wafv2:GetWebACL",
        "wafv2:GetWebACLForResource",
        "wafv2:AssociateWebACL",
        "wafv2:DisassociateWebACL",
        "shield:GetSubscriptionState",
        "shield:DescribeProtection",
        "shield:CreateProtection",
        "shield:DeleteProtection"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": ["ec2:AuthorizeSecurityGroupIngress", "ec2:RevokeSecurityGroupIngress"],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": ["ec2:CreateSecurityGroup"],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": ["ec2:CreateTags"],
      "Resource": "arn:aws:ec2:*:*:security-group/*",
      "Condition": {
        "StringEquals": { "ec2:CreateAction": "CreateSecurityGroup" },
        "Null": { "aws:RequestTag/elbv2.k8s.aws/cluster": "false" }
      }
    },
    {
      "Effect": "Allow",
      "Action": ["ec2:CreateTags", "ec2:DeleteTags"],
      "Resource": "arn:aws:ec2:*:*:security-group/*",
      "Condition": {
        "Null": {
          "aws:RequestTag/elbv2.k8s.aws/cluster": "true",
          "aws:ResourceTag/elbv2.k8s.aws/cluster": "false"
        }
      }
    },
    {
      "Effect": "Allow",
      "Action": [
        "ec2:AuthorizeSecurityGroupIngress",
        "ec2:RevokeSecurityGroupIngress",
        "ec2:DeleteSecurityGroup"
      ],
      "Resource": "*",
      "Condition": {
        "Null": { "aws:ResourceTag/elbv2.k8s.aws/cluster": "false" }
      }
    },
    {
      "Effect": "Allow",
      "Action": [
        "elasticloadbalancing:CreateLoadBalancer",
        "elasticloadbalancing:CreateTargetGroup"
      ],
      "Resource": "*",
      "Condition": {
        "Null": { "aws:RequestTag/elbv2.k8s.aws/cluster": "false" }
      }
    },
    {
      "Effect": "Allow",
      "Action": [
        "elasticloadbalancing:CreateListener",
        "elasticloadbalancing:DeleteListener",
        "elasticloadbalancing:CreateRule",
        "elasticloadbalancing:DeleteRule"
      ],
      "Resource": "*"
    },
    {
      "Effect": "Allow",
      "Action": ["elasticloadbalancing:AddTags", "elasticloadbalancing:RemoveTags"],
      "Resource": [
        "arn:aws:elasticloadbalancing:*:*:targetgroup/*/*",
        "arn:aws:elasticloadbalancing:*:*:loadbalancer/net/*/*",
        "arn:aws:elasticloadbalancing:*:*:loadbalancer/app/*/*"
      ],
      "Condition": {
        "Null": {
          "aws:RequestTag/elbv2.k8s.aws/cluster": "true",
          "aws:ResourceTag/elbv2.k8s.aws/cluster": "false"
        }
      }
    },
    {
      "Effect": "Allow",
      "Action": ["elasticloadbalancing:AddTags", "elasticloadbalancing:RemoveTags"],
      "Resource": [
        "arn:aws:elasticloadbalancing:*:*:listener/net/*/*/*",
        "arn:aws:elasticloadbalancing:*:*:listener/app/*/*/*",
        "arn:aws:elasticloadbalancing:*:*:listener-rule/net/*/*/*",
        "arn:aws:elasticloadbalancing:*:*:listener-rule/app/*/*/*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": ["elasticloadbalancing:AddTags"],
      "Resource": [
        "arn:aws:elasticloadbalancing:*:*:targetgroup/*/*",
        "arn:aws:elasticloadbalancing:*:*:loadbalancer/net/*/*",
        "arn:aws:elasticloadbalancing:*:*:loadbalancer/app/*/*"
      ],
      "Condition": {
        "StringEquals": {
          "elasticloadbalancing:CreateAction": ["CreateTargetGroup", "CreateLoadBalancer"]
        },
        "Null": { "aws:RequestTag/elbv2.k8s.aws/cluster": "false" }
      }
    },
    {
      "Effect": "Allow",
      "Action": [
        "elasticloadbalancing:ModifyLoadBalancerAttributes",
        "elasticloadbalancing:SetIpAddressType",
        "elasticloadbalancing:SetSecurityGroups",
        "elasticloadbalancing:SetSubnets",
        "elasticloadbalancing:DeleteLoadBalancer",
        "elasticloadbalancing:ModifyTargetGroup",
        "elasticloadbalancing:ModifyTargetGroupAttributes",
        "elasticloadbalancing:DeleteTargetGroup"
      ],
      "Resource": "*",
      "Condition": {
        "Null": { "aws:ResourceTag/elbv2.k8s.aws/cluster": "false" }
      }
    },
    {
      "Effect": "Allow",
      "Action": [
        "elasticloadbalancing:RegisterTargets",
        "elasticloadbalancing:DeregisterTargets"
      ],
      "Resource": "arn:aws:elasticloadbalancing:*:*:targetgroup/*/*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "elasticloadbalancing:SetWebAcl",
        "elasticloadbalancing:ModifyListener",
        "elasticloadbalancing:AddListenerCertificates",
        "elasticloadbalancing:RemoveListenerCertificates",
        "elasticloadbalancing:ModifyRule"
      ],
      "Resource": "*"
    }
  ]
}
```

- Create the EKS cluster: eks-test-cluster

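  - Settings
    - Proceed with Custom Configuration.
    - EKS Auto Mode: a cluster management mode that takes care of things like ALB and EBS attachment for you → disabled
    - Kubernetes version settings: where the Kubernetes version support policy is chosen
      - Standard: the version is supported for 14 months → upgraded automatically afterwards (no cost)
      - Extended: support is extended to 26 months → charges apply after 14 months
      - Recommended: Standard for development, Extended for real production use
    - Auto Mode Compute: controls whether Nodes are added manually or automatically
      - Handled later through Helm and ArgoCD → disabled
- Create the EKS node group: eks-test-node-group
  - Create it under Cluster - Compute - Node groups - Add Node Group.
  - The minimum instance size is small.
  - To add Argo CD and similar components, use two or more Nodes (based on t3.small).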

- Connect kubectl to EKS and verify
  - Connect

```shell
aws eks update-kubeconfig --region {aws-region} --name {eks-cluster-name}
```

  - Verify

```shell
# List the Nodes
kubectl get nodes
```

- Set up ECR and upload the image

- Write the Kubernetes resources (Deployment + Service)
  - Basic template example

```yaml
# k8s/deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: spring-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: spring-app
  template:
    metadata:
      labels:
        app: spring-app
    spec:
      containers:
        - name: spring-container
          image: 211125374993.dkr.ecr.ap-southeast-1.amazonaws.com/eks-test-ecr:latest
          ports:
            - containerPort: 8080
          imagePullPolicy: Always
```

```yaml
# k8s/service.yaml
apiVersion: v1
kind: Service
metadata:
  name: spring-app-service
spec:
  type: LoadBalancer
  selector:
    app: spring-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
```

  - Apply and connect

```shell
# Apply
kubectl apply -f deployment.yaml
kubectl apply -f service.yaml

# Connect: list the Services, then open the EXTERNAL-IP value to check
kubectl get svc
```

🌈 Kubernetes - Setting Up CI/CD on EKS

| Component | Role |
|---|---|
| Github Action (CI) | Github push → image is registered in ECR (CI) |
| ArgoCD (CD) | After the step above, a sed command creates a commit in the Helm repository; ArgoCD detects it and deploys (CD) |
| Helm | Manages deployment-related resources (Service, Deployment, PV, PVC, and so on) as YAML files |

Setting Up EKS with Github Action (CI)

Prerequisites

- AWS_ACCESS_KEY_ID
- AWS_SECRET_ACCESS_KEY
- AWS_REGION
- AWS_ACCOUNT_ID

1. Write .github/workflows/deploy.yml (the values above must be registered as Github Secrets)

```yaml
name: Deploy Spring App to ECR

on:
  push:
    branches:
      - main

jobs:
  build_and_push:
    runs-on: ubuntu-latest

    steps:
      - name: Checkout code
        uses: actions/checkout@v3

      - name: Configure AWS credentials
        uses: aws-actions/configure-aws-credentials@v2
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: ${{ secrets.AWS_REGION }}

      - name: Login to Amazon ECR
        id: ecr-login
        uses: aws-actions/amazon-ecr-login@v1

      - name: Build, tag, and push image to ECR
        env:
          AWS_REGION: ${{ secrets.AWS_REGION }}
          AWS_ACCOUNT_ID: ${{ secrets.AWS_ACCOUNT_ID }}
        run: |
          docker build -t $AWS_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com/eks-test-ecr:latest .
          docker tag $AWS_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com/eks-test-ecr:latest \
            $AWS_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com/eks-test-ecr:${{ github.sha }}
          docker push $AWS_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com/eks-test-ecr:latest
          docker push $AWS_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com/eks-test-ecr:${{ github.sha }}

      - name: Update Kubernetes deployment yaml
        env:
          AWS_REGION: ${{ secrets.AWS_REGION }}
          AWS_ACCOUNT_ID: ${{ secrets.AWS_ACCOUNT_ID }}
        run: |
          export NEW_IMAGE="$AWS_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com/eks-test-ecr:${{ github.sha }}"
          echo "New Image: $NEW_IMAGE"
          sed -i "s|image:.*|image: $NEW_IMAGE|" ./k8s/deployment.yaml

      - name: Push updated deployment.yaml
        run: |
          git config user.name "GitHub Actions"
          git config user.email "actions@github.com"
          git add ./k8s/deployment.yaml
          git commit -m "Update image to ${{ github.sha }}"
          git push
```

2. Verify

- Push a commit to Github; if an image is created in ECR, it worked!
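To see what the workflow's `sed` step does to `k8s/deployment.yaml`, the substitution can be tried locally on a throwaway file. This is a minimal sketch: the image names are hypothetical placeholders, and it writes to a new file instead of using `sed -i`, because the `-i` flag behaves differently on GNU sed (the Ubuntu runner) and BSD sed (macOS).

```shell
#!/usr/bin/env bash
# Sketch: reproduce the workflow's sed substitution on a temporary file.

workdir="$(mktemp -d)"
cat > "$workdir/deployment.yaml" <<'EOF'
    spec:
      containers:
        - name: spring-container
          image: example.invalid/eks-test-ecr:latest
          imagePullPolicy: Always
EOF

# Hypothetical image reference; the workflow builds it from secrets and github.sha.
NEW_IMAGE="example.invalid/eks-test-ecr:0123abc"

# Same expression as the workflow. "|" is the delimiter because the image
# reference itself contains "/" characters.
sed "s|image:.*|image: $NEW_IMAGE|" "$workdir/deployment.yaml" > "$workdir/deployment.new.yaml"

cat "$workdir/deployment.new.yaml"
```

The indentation before `image:` is kept because the pattern starts matching at the word `image:`, and the `imagePullPolicy` line is left alone because it does not contain `image:`.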

Setting Up EKS with ArgoCD (CD)

ArgoCD integrates with Github, reads the Helm deployment scripts, and deploys them automatically (Continuous Deployment). A Github access token is needed beforehand.

1. Create the ArgoCD namespace in Kubernetes

```shell
$ kubectl create namespace argocd
```

2. Install ArgoCD in Kubernetes

```shell
$ kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml
```

3. Verify

```shell
kubectl get pods -n argocd

# If a Pod is Pending, check the cause
kubectl describe pod {pod name} -n argocd

# Port-forward ArgoCD from 443 to localhost 8080
kubectl port-forward svc/argocd-server -n argocd 8080:443

# Check the initial ArgoCD password (the password is printed)
kubectl get secret argocd-initial-admin-secret -n argocd -o jsonpath="{.data.password}" | base64 --decode
```

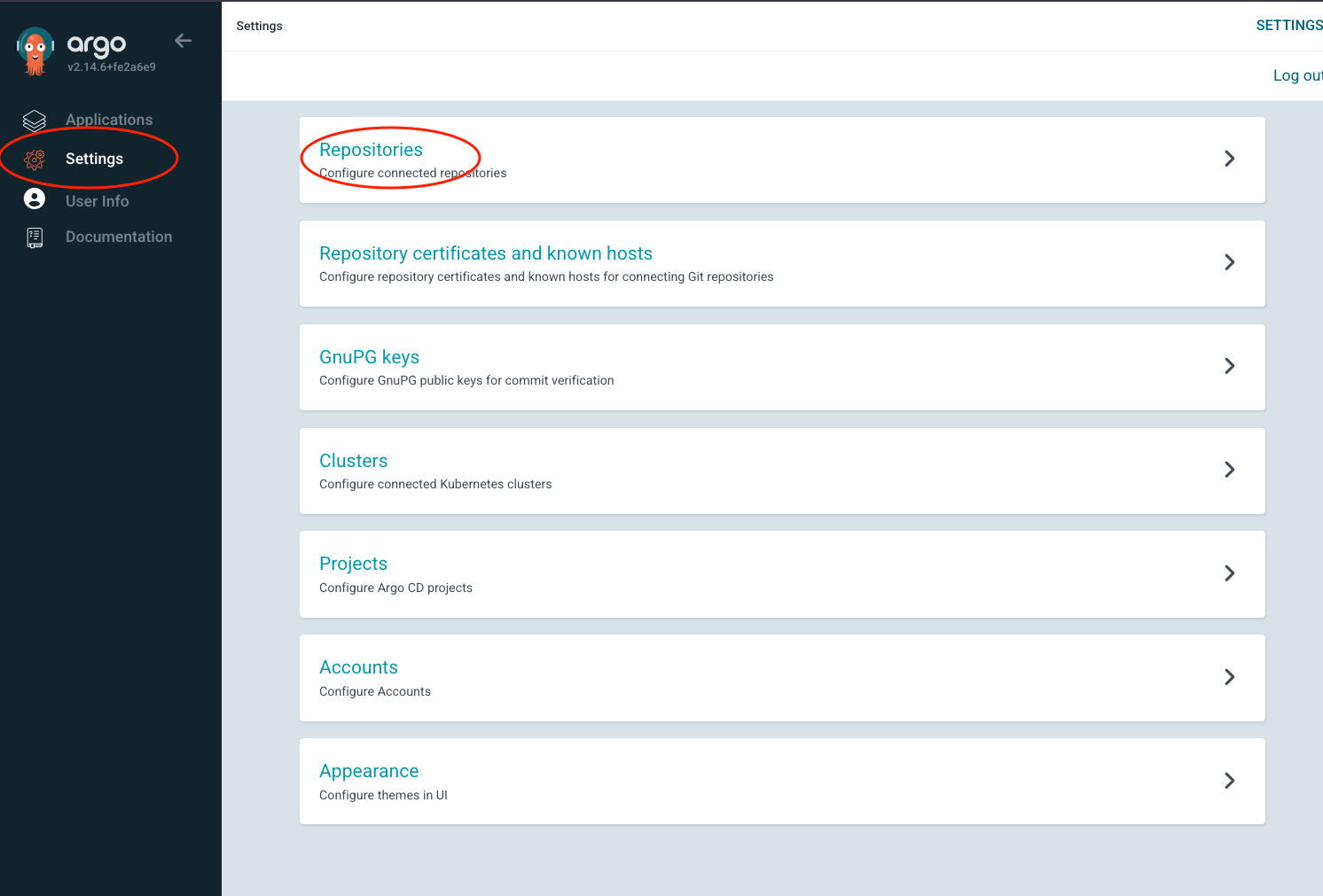
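The password command above ends with `base64 --decode` because Kubernetes stores Secret values base64-encoded under `.data`. The round trip below illustrates this with a made-up value; it is only a sketch of the encoding step and does not talk to a cluster.

```shell
#!/usr/bin/env bash
# Sketch: Kubernetes keeps Secret values base64-encoded, so the jsonpath
# output has to be decoded before it can be used as a password.

plain="example-password"   # hypothetical value, not a real secret
encoded="$(printf '%s' "$plain" | base64)"
decoded="$(printf '%s' "$encoded" | base64 --decode)"

echo "stored in .data.password: $encoded"
echo "after decoding:           $decoded"
```

Base64 is an encoding, not encryption, so anyone who can read the Secret can recover the password.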

4. Register Github in ArgoCD (it needs access rights to be able to see the commits)

- Open ArgoCD and do this under Settings - Repository tab
- A Github secret token is required (fill in Username and Secret)
- If the connection shows up in the list, it worked

5. Register an Application in ArgoCD (this is the target that Continuous Deployment will act on)

- Click Applications - + New App to start, enter the values below, then click Create.

| Field | Value |
|---|---|
| Application Name | spring-server (any name) |
| Project | default |
| Sync Policy | Manual (manual deployment) or Auto (automatic deployment) |
| Prune Propagation Policy | background |
| Repository URL | select the Github repository connected above |
| Revision (branch name) | main |
| Path (folder with the k8s scripts) | k8s |
| Cluster | https://kubernetes.default.svc (default in-cluster address of EKS) |
| Namespace | default, or any namespace you want (where your app will be deployed) |
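The same Application can also be described declaratively instead of through the UI. The sketch below writes an Argo CD `Application` manifest with the values from the table; the repository URL is a hypothetical placeholder, and the `automated` sync block is an assumption that corresponds to choosing Auto in the form.

```shell
#!/usr/bin/env bash
# Sketch: write an Argo CD Application manifest matching the form values above.

manifest="$(mktemp)"
cat > "$manifest" <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: spring-server
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/example-org/example-repo.git  # hypothetical
    targetRevision: main
    path: k8s
  destination:
    server: https://kubernetes.default.svc
    namespace: default
  syncPolicy:
    automated: {}   # omit syncPolicy entirely for manual sync
EOF

cat "$manifest"
# To register it (requires cluster access):
#   kubectl apply -n argocd -f "$manifest"
```

Keeping this manifest in Git makes the Application itself reviewable, at the cost of one more file to maintain.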

6. Change the ArgoCD Service from ClusterIP to LoadBalancer type so it can be reached from outside.

```shell
# Change the type
$ kubectl patch svc argocd-server -n argocd -p '{"spec": {"type": "LoadBalancer"}}'

# Verify
$ kubectl get svc -n argocd
```

*Unless configured otherwise, ArgoCD polls Github every 3 minutes for changes to the deployment.yaml file and syncs them.

🌈 Helm Setup (Managing YAML Files)

- Prerequisites
  - Create a Github repository for Helm (e.g. test-infra)
  - Install Helm

```shell
# Check the installation
$ helm version

# Install on macOS
$ brew install helm
```

Initialize Helm

```shell
$ helm create {app name}
```

Helm chart folder structure (for reference)

| Path | Purpose |
|---|---|
| Chart.yaml | Chart metadata such as name and version |
| values.yaml | User-configurable values (image, env, and so on) |
| charts/ | Folder for dependency charts |
| templates/deployment.yaml | Creates the application Deployment resource (container spec) |
| templates/service.yaml | Creates the Service resource (network access) |
| templates/ingress.yaml | Creates the Ingress resource (for domain mapping) |
| templates/hpa.yaml | Horizontal Pod Autoscaler resource (automatic scaling) |
| templates/serviceaccount.yaml | Creates the ServiceAccount resource (permissions); needed for AWS IAM integration |
| templates/_helpers.tpl | Shared template functions (reusable labels, names, and so on) |
| templates/NOTES.txt | Post-install notes; optional |
| templates/tests/test-connection.yaml | Helm test resource (for helm test) |

Writing a basic Helm template

```yaml
# 📁 templates/deployment.yaml
apiVersion: apps/v1   ### API version
kind: Deployment      ### Declares the resource type: Deployment
### Name (the identifier used when managing it with kubectl)
metadata:
  name: spring-app
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      app: spring-app
  template:
    metadata:
      labels:
        app: spring-app
    spec:
      containers:
        - name: spring-container
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
          ports:
            - containerPort: 8080
          env:
            {{- range $key, $value := .Values.env }}
            - name: {{ $key }}
              value: "{{ $value }}"
            {{- end }}
          imagePullPolicy: Always
```

```yaml
# 📁 templates/service.yaml
apiVersion: v1
kind: Service
metadata:
  name: spring-app
spec:
  selector:
    app: spring-app
  type: {{ .Values.service.type }}
  ports:
    - protocol: TCP
      port: {{ .Values.service.port }}
      targetPort: 8080
```

```yaml
# 📁 Chart.yaml
apiVersion: v2
name: spring-app
description: A Helm chart for Kubernetes

# A chart can be either an 'application' or a 'library' chart.
#
# Application charts are a collection of templates that can be packaged into versioned archives
# to be deployed.
#
# Library charts provide useful utilities or functions for the chart developer. They're included as
# a dependency of application charts to inject those utilities and functions into the rendering
# pipeline. Library charts do not define any templates and therefore cannot be deployed.
type: application

# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
# Versions are expected to follow Semantic Versioning (https://semver.org/)
version: 0.1.0

# This is the version number of the application being deployed. This version number should be
# incremented each time you make changes to the application. Versions are not expected to
# follow Semantic Versioning. They should reflect the version the application is using.
# It is recommended to use it with quotes.
appVersion: "1.16.0"
```

```yaml
# 📁 values.yaml
# Default values for spring-app.
# This is a YAML-formatted file.
# Declare variables to be passed into your templates.

### Image registry settings
image:
  repository: 211125374993.dkr.ecr.ap-southeast-1.amazonaws.com/eks-test-ecr
  tag: latest

### Number of Pods to deploy
replicaCount: 1

### Service type settings
service:
  type: LoadBalancer
  port: 80

### Container environment variables
env:
  SPRING_PROFILES_ACTIVE: "prod"
  AWS_REGION: "ap-southeast-1"
  TEST_VALUE: "100"
```

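Earlier, ArgoCD was connected to the application's Github repository; now change that connection to the Helm repository.

Configure Github Actions to update the Helm repository with a sed command and commit the change.

- The image is pushed with the commit hash as its tag, and
- the tag in the deployment script that ArgoCD reads is changed to the same commit hash.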

```yaml
name: Deploy Spring App to ECR

on:
  push:
    branches:
      - main

jobs:
  build_and_push:
    runs-on: ubuntu-latest

    steps:
      - name: Checkout code
        uses: actions/checkout@v3

      - name: Configure AWS credentials
        uses: aws-actions/configure-aws-credentials@v2
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: ${{ secrets.AWS_REGION }}

      - name: Login to Amazon ECR
        id: ecr-login
        uses: aws-actions/amazon-ecr-login@v1

      - name: Build, tag, and push image to ECR
        env:
          AWS_REGION: ${{ secrets.AWS_REGION }}
          AWS_ACCOUNT_ID: ${{ secrets.AWS_ACCOUNT_ID }}
        run: |
          COMMIT_HASH=$(echo $GITHUB_SHA | cut -c1-7)
          docker build --no-cache -t $AWS_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com/eks-test-ecr:latest .
          docker tag $AWS_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com/eks-test-ecr:latest \
            $AWS_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com/eks-test-ecr:$COMMIT_HASH
          docker push $AWS_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com/eks-test-ecr:latest
          docker push $AWS_ACCOUNT_ID.dkr.ecr.$AWS_REGION.amazonaws.com/eks-test-ecr:$COMMIT_HASH

      - name: Update Helm values.yaml in Helm Repo
        env:
          GH_HELM_REPO_PAT: ${{ secrets.GH_HELM_REPO_PAT }}
        run: |
          COMMIT_HASH=$(echo $GITHUB_SHA | cut -c1-7)
          git clone https://x-access-token:${GH_HELM_REPO_PAT}@github.com/MG8-Project/eks-test-infra.git
          cd eks-test-infra/spring-app
          sed -i "s/tag: .*/tag: $COMMIT_HASH/" values.yaml
          git config user.name "github-actions"
          git config user.email "actions@github.com"
          git commit -am "Update image tag to $COMMIT_HASH"
          git push https://x-access-token:${GH_HELM_REPO_PAT}@github.com/MG8-Project/eks-test-infra.git HEAD:main
```
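The two key lines of this workflow, shortening the commit SHA and rewriting `tag:` in values.yaml, can be checked locally without touching any repository. The sketch below uses a made-up SHA and a trimmed values.yaml; both are placeholders for illustration.

```shell
#!/usr/bin/env bash
# Sketch: derive the 7-character tag and rewrite values.yaml the way the
# workflow does, using a temporary copy instead of the real Helm repository.

GITHUB_SHA="a1b2c3d4e5f60718293a4b5c6d7e8f9012345678"   # hypothetical commit SHA
COMMIT_HASH="$(echo "$GITHUB_SHA" | cut -c1-7)"

workdir="$(mktemp -d)"
cat > "$workdir/values.yaml" <<'EOF'
image:
  repository: example.invalid/eks-test-ecr
  tag: latest
replicaCount: 1
EOF

# Same expression as the workflow, written to a new file for portability.
sed "s/tag: .*/tag: $COMMIT_HASH/" "$workdir/values.yaml" > "$workdir/values.new.yaml"

echo "tag: $COMMIT_HASH"
cat "$workdir/values.new.yaml"
```

One thing to watch for: a short hash made only of digits may be read by YAML as a number rather than a string, so writing the tag in quotes in values.yaml is a cautious choice.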

The setup is done!

Let's recap the structure built so far.

1. Code is committed to the application repository.
2. Github Actions builds the code into a Docker image and stores it in the ECR image registry.
3. Github Actions then uses a sed command to push a commit to the Helm repository.
4. ArgoCD watches the Helm repository, picks up that commit, reads the deployment script, and deploys to the registered Application.

Below is how to register the domain you want through an Ingress in Helm.

Do it if you need it! Most setups probably will, so it is included in this same post.

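🌈 Helm - Ingress (Domain & HTTPS Setup)

An Ingress is a set of rules that defines how external requests are handled by Services inside the cluster. It covers traffic load balancing, SSL certificate handling, and domain-based virtual hosting.

An Ingress Controller is the program that runs in the cluster and processes requests so that an Ingress actually works. In short, the Ingress is the traffic rule and the Ingress Controller is the program that performs the routing.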

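1. Install eksctl

- Ingress Controllers come in several types (AWS, Nginx, and others); to use an AWS load balancer, this setup uses the AWS Load Balancer Controller (aws-load-balancer-webhook-service).

```shell
$ brew tap weaveworks/tap
$ brew install weaveworks/tap/eksctl
```

2. Create the IAM role

- AmazonEKSLoadBalancerControllerRole (paste the AWS Load Balancer Controller permissions, the policy JSON listed under the EKS IAM role step, into the IAM policy)

3. Create the Service Account (IRSA, IAM Roles for Service Accounts)

*What is a Service Account?

It is the account a Pod uses when it accesses the Kubernetes API server; by default every Pod runs with the default Service Account. Reaching external (AWS) resources requires appropriate permissions, so the Service Account is linked to an IAM role.

```shell
# --name is the Service Account name
eksctl create iamserviceaccount \
  --cluster eks-test-cluster \
  --namespace kube-system \
  --name aws-load-balancer-controller \
  --attach-role-arn arn:aws:iam::<ACCOUNT_ID>:role/AmazonEKSLoadBalancerControllerRole \
  --approve
```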

4. Install aws-load-balancer-controller with Helm

Pass the cluster name, region, VPC ID, and the Service Account created above.

```shell
### Add the eks-charts repository that provides the eks/ chart
helm repo add eks https://aws.github.io/eks-charts
helm repo update

helm install aws-load-balancer-controller eks/aws-load-balancer-controller \
  -n kube-system \
  --set clusterName=eks-test-cluster \
  --set region=ap-southeast-1 \
  --set vpcId=vpc-0fa9b98d41c276bdf \
  --set serviceAccount.name=aws-load-balancer-controller

### If it already exists, update it to use the existing Service Account
helm upgrade --install aws-load-balancer-controller eks/aws-load-balancer-controller \
  -n kube-system \
  --set clusterName=eks-test-cluster \
  --set region=ap-southeast-1 \
  --set vpcId=vpc-0fa9b98d41c276bdf \
  --set serviceAccount.name=aws-load-balancer-controller \
  --set serviceAccount.create=false

### Verify: check that the Service Account you configured is printed
kubectl get pod -n kube-system -l app.kubernetes.io/name=aws-load-balancer-controller -o jsonpath="{.items[0].spec.serviceAccountName}"

### After changes, re-apply by restarting aws-load-balancer-controller
kubectl rollout restart deployment aws-load-balancer-controller -n kube-system

### Check the logs
kubectl logs -n kube-system deployment/aws-load-balancer-controller
```

5. Write the Ingress for the application in the Helm chart

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: spring-app-ingress
  annotations:
    alb.ingress.kubernetes.io/group.name: shared-alb-group
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
    alb.ingress.kubernetes.io/certificate-arn: {{ .Values.ingress.certificateArn }}
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP":80,"HTTPS":443}]'
    alb.ingress.kubernetes.io/ssl-redirect: '443'
    alb.ingress.kubernetes.io/backend-protocol: HTTP
    alb.ingress.kubernetes.io/healthcheck-path: /health # load balancer health check
spec:
  ingressClassName: alb
  rules:
    - host: {{ .Values.ingress.host }}
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: spring-app
                port:
                  number: 80
```

6. Ingress for ArgoCD

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: argocd-server-ingress
  namespace: argocd
  annotations:
    alb.ingress.kubernetes.io/group.name: shared-alb-group
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
    alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:ap-southeast-1:211125374993:certificate/9382fcc5-245e-46be-a1ec-c36189171073 # ACM certificate ARN
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP":80,"HTTPS":443}]'
    alb.ingress.kubernetes.io/force-ssl-redirect: 'true'
    alb.ingress.kubernetes.io/backend-protocol: HTTP
    alb.ingress.kubernetes.io/healthcheck-path: /login
    alb.ingress.kubernetes.io/success-codes: '200-399'
spec:
  ingressClassName: alb
  rules:
    - host: test-argo.luckypanda.io
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: argocd-server
                port:
                  number: 80
```

7. Apply

```shell
### Apply the manifest
$ kubectl apply -f {file path}

### Restart the server
$ kubectl rollout restart deployment -n {namespace} {server name}
```

Really done!

Errors you may run into along the way

(Err) ArgoCD - fixing the err_too_many_redirects error

- When ArgoCD is connected over HTTPS and opened in a browser, a too_many_redirects error can occur.
- Cause
  - The ALB redirect and the ArgoCD redirect overlap
  - HTTPS → ALB → HTTP → ArgoCD → redirect back to HTTPS → ALB → …
  - → an endless redirect loop
- Fix
  - Add the server.insecure: "true" setting to the ConfigMap
    - This configures ArgoCD not to redirect to HTTPS
- Editing the ConfigMap

```shell
### Edit (opens vi)
kubectl edit configmap argocd-cmd-params-cm -n argocd
```

```yaml
### Add this
data:
  server.insecure: "true"
```

```shell
# Restart the ArgoCD server to apply the change
kubectl rollout restart deployment argocd-server -n argocd
```
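The redirect loop described above can be reasoned about with a toy model. The sketch below is not ArgoCD or ALB code; `simulate_request` is a hypothetical helper that only encodes the two rules from the cause list: the ALB always forwards plain HTTP, and argocd-server answers HTTP with a redirect to HTTPS unless server.insecure is "true".

```shell
#!/usr/bin/env bash
# Sketch: a toy model of the redirect loop between the ALB and argocd-server.

# Hypothetical helper: prints "ok after N redirects" or "too many redirects".
simulate_request() {
  insecure="$1"
  max_redirects=5
  redirects=0
  while [ "$redirects" -le "$max_redirects" ]; do
    # Browser -> ALB over HTTPS, then ALB -> argocd-server over HTTP.
    if [ "$insecure" = "true" ]; then
      # argocd-server serves the HTTP request directly.
      echo "ok after $redirects redirects"
      return 0
    fi
    # argocd-server sees HTTP and sends the browser back to HTTPS.
    redirects=$((redirects + 1))
  done
  echo "too many redirects"
}

simulate_request "false"
simulate_request "true"
```

With the flag off, every pass through the ALB ends in another redirect, which is what the browser eventually reports as err_too_many_redirects.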
